When we think of deepfakes, we tend to imagine AI-generated people. This might be lighthearted, like a deepfake Tom Cruise, or malicious, like nonconsensual pornography. What we don’t imagine is deepfake geography: AI-generated images of cityscapes and countryside. But that’s exactly what some researchers are worried about.

Specifically, geographers are concerned about the spread of fake, AI-generated satellite imagery. Such pictures could mislead in a variety of ways. They could be used to create hoaxes about wildfires or floods, or to discredit stories based on real satellite imagery. (Think about reports on China’s Uyghur detention camps that gained credence from satellite evidence. As geographic deepfakes become widespread, the Chinese government could claim those images are fake, too.) Deepfake geography might even be a national security issue, if geopolitical adversaries use fake satellite imagery to mislead one another.

The US military warned about this very prospect in 2019. Todd Myers, an analyst at the National Geospatial-Intelligence Agency, imagined a scenario in which military planning software is fooled by fake data that shows a bridge in an incorrect location. “So from a tactical perspective or mission planning, you train your forces to go a certain route, toward a bridge, but it’s not there. Then there’s a big surprise waiting for you,” said Myers.

The first step to tackling these issues is to make people aware there’s a problem in the first place, says Bo Zhao, an assistant professor of geography at the University of Washington. Zhao and his colleagues recently published a paper on the subject of “deep fake geography,” which includes their own experiments generating and detecting this imagery.

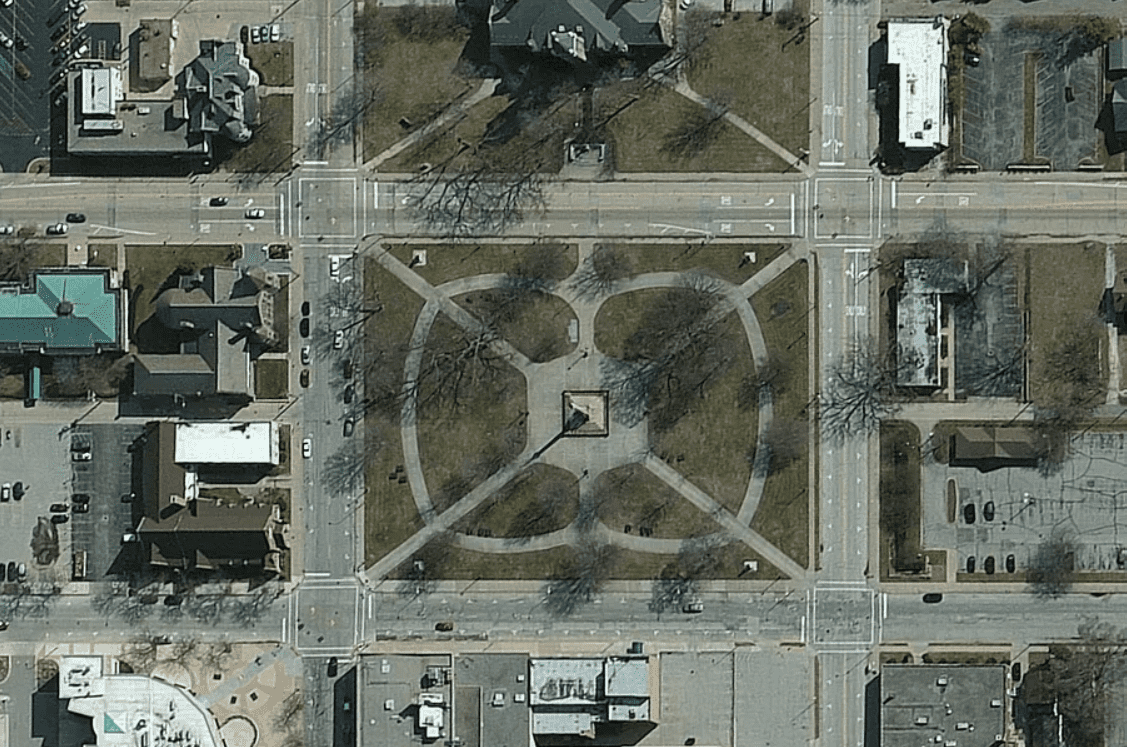

The aim, Zhao tells The Verge over email, “is to demystify the function of absolute reliability of satellite images and to raise public awareness of the potential influence of deep fake geography.” He says that although deepfakes are widely discussed in other fields, his paper is likely the first to touch upon the topic in geography.

“While many GIS [geographic information system] practitioners have been celebrating the technical merits of deep learning and other types of AI for geographical problem solving, few have publicly recognized or criticized the potential threats of deep fake to the field of geography or beyond,” write the authors.

Far from presenting deepfakes as a novel challenge, Zhao and his colleagues locate the technology in a long history of fake geography that dates back millennia. Humans have been lying with maps for pretty much as long as maps have existed, they say, from mythological geographies devised by ancient civilizations like the Babylonians, to modern propaganda maps distributed during wartime “to shake the enemy’s morale.”

One particularly curious example comes from so-called “paper towns” and “trap streets.” These are fake settlements and roads inserted by cartographers into maps in order to catch rivals stealing their work. If anyone produces a map which includes your very own Fakesville, Ohio, you know — and can prove — that they’re copying your cartography.

“It is a centuries-old phenomenon,” says Zhao of fake geography, though new technology produces new challenges. “It is novel partially because the deepfaked satellite images are so uncannily realistic. The untrained eyes would easily consider they are authentic.”

It’s certainly easier to produce fake satellite imagery than fake videos of humans: satellite images can be convincing even at low resolution, and the medium itself is inherently believable. This may be due to what we know about the expense and origin of these pictures, says Zhao. “Since most satellite images are generated by professionals or governments, the public would usually prefer to believe they are authentic.”

As part of their study, Zhao and his colleagues created software to generate deepfake satellite images, using the same basic AI technique, generative adversarial networks (GANs), that powers well-known sites like ThisPersonDoesNotExist.com. They then created detection software that was able to spot the fakes based on characteristics like texture, contrast, and color. But as experts have warned for years regarding deepfakes of people, any detection tool needs constant updates to keep up with improvements in deepfake generation.
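The article doesn’t describe how the researchers’ detector works internally, so what follows is only a toy sketch of the general idea of telling images apart by hand-picked characteristics like color, contrast, and texture. Everything in it is a hypothetical choice of ours, not the team’s method: the feature definitions (per-channel mean color, RMS contrast, and the average brightness change between neighboring pixels as a crude texture measure), the nearest-centroid classifier, and the tiny synthetic “tiles,” which simply assume fakes are smoother than real images.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical representation: an RGB image as rows of (r, g, b) tuples, 0-255.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def luminance(p: Pixel) -> float:
    """Perceived brightness of one pixel (ITU-R BT.601 weights)."""
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b


def extract_features(img: Image) -> List[float]:
    """Return [mean_r, mean_g, mean_b, contrast, texture] for one image."""
    h, w = len(img), len(img[0])
    n = h * w
    # Color: average value of each channel.
    means = [sum(px[c] for row in img for px in row) / n for c in range(3)]
    # Contrast: RMS contrast, i.e. standard deviation of luminance.
    lum = [[luminance(px) for px in row] for row in img]
    mean_l = sum(v for row in lum for v in row) / n
    contrast = math.sqrt(sum((v - mean_l) ** 2 for row in lum for v in row) / n)
    # Texture (crude): mean absolute luminance change between neighbors.
    diffs = []
    for y in range(h):
        for x in range(w):
            if x + 1 < w:
                diffs.append(abs(lum[y][x + 1] - lum[y][x]))
            if y + 1 < h:
                diffs.append(abs(lum[y + 1][x] - lum[y][x]))
    texture = sum(diffs) / len(diffs) if diffs else 0.0
    return means + [contrast, texture]


class NearestCentroidDetector:
    """Labels an image by the closest average feature vector seen in training."""

    def fit(self, images: Sequence[Image], labels: Sequence[str]) -> "NearestCentroidDetector":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for img, label in zip(images, labels):
            feats = extract_features(img)
            acc = sums.setdefault(label, [0.0] * len(feats))
            for i, v in enumerate(feats):
                acc[i] += v
            counts[label] = counts.get(label, 0) + 1
        # Centroid = per-feature average for each label.
        self.centroids = {lab: [s / counts[lab] for s in total]
                          for lab, total in sums.items()}
        return self

    def predict(self, img: Image) -> str:
        feats = extract_features(img)
        return min(self.centroids, key=lambda lab: math.dist(feats, self.centroids[lab]))


# Toy stand-ins (hypothetical): busy "real" tiles vs. smooth "fake" tiles.
def make_flat(v: int, size: int = 4) -> Image:
    return [[(v, v, v) for _ in range(size)] for _ in range(size)]


def make_checker(a: int, b: int, size: int = 4) -> Image:
    return [[(a, a, a) if (x + y) % 2 == 0 else (b, b, b) for x in range(size)]
            for y in range(size)]


if __name__ == "__main__":
    train = [make_checker(0, 200), make_checker(20, 220), make_flat(100), make_flat(120)]
    labels = ["real", "real", "fake", "fake"]
    detector = NearestCentroidDetector().fit(train, labels)
    print(detector.predict(make_checker(10, 210)))  # real
    print(detector.predict(make_flat(110)))         # fake
```

A nearest-centroid rule is about the simplest classifier there is. A practical version would at least normalize features that sit on very different scales, use far richer features or a learned model, and, as Zhao’s caveat about constant updates suggests, need retraining whenever generators learn to mimic whatever the detector keys on.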

For Zhao, though, the most important thing is to raise awareness so geographers aren’t caught off guard. As he and his colleagues write: “If we continue being unaware of and unprepared for deep fake, we run the risk of entering a ‘fake geography’ dystopia.”