It’s relatively easy to extract personal information, including face images, age, gender, and names, from public screenshots of video meetings, according to researchers at Ben-Gurion University. The coauthors of a newly published study say a combination of image processing, text recognition, and forensics enabled them to cross-reference Zoom data with social network data, demonstrating that meeting participants might face risks they aren’t aware of.

As social distancing and shelter-in-place orders motivated by the pandemic rule out many in-person meetings, hundreds of millions of people around the world have turned to video conferencing platforms instead. (In April, Microsoft Teams passed 75 million daily active users, while Zoom and Google Meet passed 300 million and 100 million daily meeting participants, respectively.) But as the platforms come into wide use, security flaws are emerging, some of which enable malicious actors to “spy” on meetings.

This latest work set out to explore the privacy implications of attending Zoom meetings. The researchers first curated an image data set of screenshots from thousands of meetings using Twitter and Instagram web scrapers, which they configured to look for terms and hashtags like “Zoom school” and “#zoom-meeting.” After filtering out duplicates and posts without images, they trained an algorithm to identify Zoom collages and used it to narrow the collection to 15,706 meeting screenshots.
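To make the filtering step concrete, here is a minimal sketch of how scraped posts might be narrowed before a collage classifier sees them. It assumes each post is a dict with hypothetical `text` and `image` (raw bytes) fields, uses the two search terms quoted above as an illustrative list, and treats duplicates as byte-identical images. This is not the researchers' code, and the collage classifier itself is out of scope.

```python
import hashlib
from typing import Iterable, List

# Illustrative terms taken from the article; the study's full list is not given.
SEARCH_TERMS = ("zoom school", "#zoom-meeting")


def matches_terms(text: str, terms=SEARCH_TERMS) -> bool:
    """Case-insensitive check for any search term in the post text."""
    lowered = text.lower()
    return any(term in lowered for term in terms)


def curate(posts: Iterable[dict]) -> List[dict]:
    """Keep posts that match a term, carry an image, and are not exact duplicates.

    Each post is assumed (hypothetically) to have 'text' and 'image' keys,
    where 'image' is raw bytes or None.
    """
    seen_hashes = set()
    kept = []
    for post in posts:
        image = post.get("image")
        if not image or not matches_terms(post.get("text", "")):
            continue  # drop posts with no image or no matching term
        digest = hashlib.sha256(image).hexdigest()
        if digest in seen_hashes:
            continue  # byte-identical image already kept
        seen_hashes.add(digest)
        kept.append(post)
    return kept
```

Exact hashing only catches byte-identical copies; a re-encoded or cropped repost would slip through, which is why a real pipeline might prefer a perceptual hash. The surviving posts would then go to the trained collage classifier.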

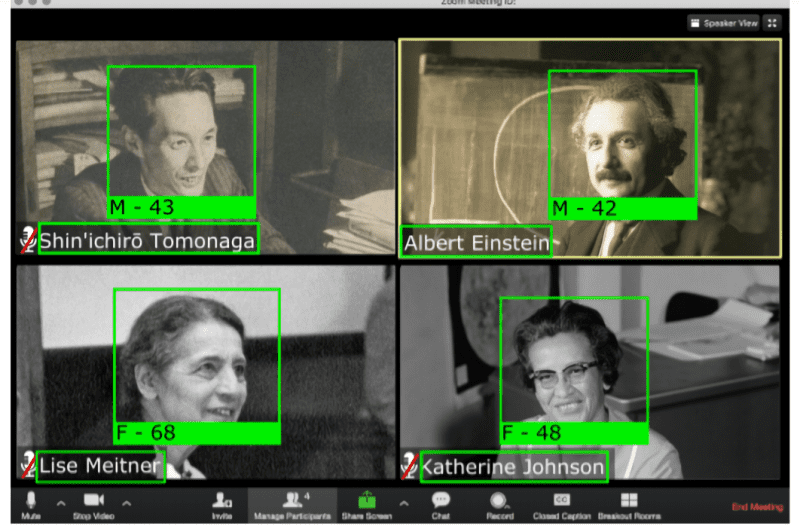

The researchers next analyzed each Zoom screenshot, beginning with face detection. Using a combination of open source pretrained models and Microsoft’s Azure Face API, they say they were able to detect faces with 80% accuracy, classify gender, and estimate age group (e.g., “child,” “adolescent,” and “older adult”). They also report that a freely available text recognition library correctly extracted 63.4% of the usernames in the screenshots.
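Username extraction depends on knowing where names sit in a screenshot. The sketch below computes candidate name-label regions for a gallery-view grid, assuming equally sized tiles that fill the whole image and a label in each tile's lower-left corner; the grid shape and the label fractions are hypothetical, and the article does not describe how the researchers located names. Each box could then be cropped and passed to an OCR engine such as Tesseract.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def name_label_boxes(width: int, height: int, rows: int, cols: int,
                     label_w_frac: float = 0.5,
                     label_h_frac: float = 0.15) -> List[Box]:
    """Return the lower-left strip of each tile in a rows x cols gallery grid.

    Assumes equal tiles covering the full screenshot; both fractions are
    hypothetical defaults, not values from the study.
    """
    tile_w = width // cols
    tile_h = height // rows
    boxes = []
    for r in range(rows):
        for c in range(cols):
            left = c * tile_w
            bottom = (r + 1) * tile_h
            right = left + round(tile_w * label_w_frac)
            top = bottom - round(tile_h * label_h_frac)
            boxes.append((left, top, right, bottom))
    return boxes


# Usage: a 1200x900 screenshot with a 3x3 gallery yields nine label regions.
boxes = name_label_boxes(1200, 900, 3, 3)
```

Real screenshots often have uneven grids, letterboxing, or toolbars, so fixed geometry like this is only a starting point; running OCR on the full image and filtering text by position is an alternative.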

Cross-referencing 85,000 names and more than 140,000 faces turned up 1,153 people who likely appeared in more than one meeting, as well as networks of Zoom users in which all the participants were coworkers. According to the researchers, this shows that data exposed in video conference meetings puts at risk not only individuals’ privacy but also the privacy and security of organizations.
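A name-only version of this cross-referencing can be sketched as follows. It assumes a hypothetical mapping from meeting ID to the usernames read from that meeting's screenshot, finds names seen in more than one meeting, and counts how often each pair of names shares a meeting; pairs with repeated co-attendance hint at groups such as coworkers. The names below are made up, and the researchers also matched faces, which this sketch does not attempt.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, Set


def normalize(name: str) -> str:
    """Crude normalization so variants like 'Omer  Cohen' and 'omer cohen' match."""
    return " ".join(name.lower().split())


def cross_reference(meetings: Dict[str, Set[str]]):
    """Return (names seen in 2+ meetings, co-attendance counts per name pair).

    `meetings` maps a hypothetical meeting ID to the usernames found in it.
    """
    appearances = defaultdict(set)  # name -> meeting IDs it appears in
    edges = defaultdict(int)        # (name_a, name_b) -> number of shared meetings
    for meeting_id, names in meetings.items():
        normed = sorted({normalize(n) for n in names})
        for name in normed:
            appearances[name].add(meeting_id)
        for a, b in combinations(normed, 2):
            edges[(a, b)] += 1
    repeat = {n: ids for n, ids in appearances.items() if len(ids) > 1}
    return repeat, dict(edges)


meetings = {
    "m1": {"Dana Levi", "Omer Cohen", "Noa Katz"},
    "m2": {"dana levi", "Omer  Cohen"},
    "m3": {"Yael Ben"},
}
repeat, edges = cross_reference(meetings)
```

Matching on names alone produces false positives for common names, which is one reason to corroborate matches with face similarity.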

“We demonstrate that it is possible to use data collected from video conference meetings along with linked data collected in other video meetings with other groups, such as online social networks, in order to perform a linkage attack on target individuals,” they wrote. “This can result in jeopardizing the target individual’s privacy by using different meetings to discover different types of connections.”
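In its simplest form, a linkage attack joins two data sets on attributes they share. The sketch below links people extracted from meetings to social network profiles by exact match on a hypothetical key of name, gender, and age group; the field names and records are illustrative, and a realistic attack would use fuzzier matching (for example, face similarity) rather than exact equality.

```python
from typing import Dict, List, Tuple


def link_records(meeting_people: List[Dict[str, str]],
                 social_profiles: List[Dict[str, str]],
                 keys=("name", "gender", "age_group")) -> List[Tuple[dict, dict]]:
    """Pair each meeting-derived record with profiles that agree on all keys.

    The key fields are hypothetical quasi-identifiers chosen for illustration.
    """
    index: Dict[tuple, List[dict]] = {}
    for profile in social_profiles:
        index.setdefault(tuple(profile.get(k) for k in keys), []).append(profile)
    links = []
    for person in meeting_people:
        for profile in index.get(tuple(person.get(k) for k in keys), []):
            links.append((person, profile))
    return links


meeting_people = [
    {"name": "dana levi", "gender": "female", "age_group": "adult", "meeting": "m1"},
]
social_profiles = [
    {"name": "dana levi", "gender": "female", "age_group": "adult", "employer": "Acme"},
    {"name": "dana levi", "gender": "female", "age_group": "child", "employer": ""},
]
links = link_records(meeting_people, social_profiles)
```

Each extra attribute narrows the candidate set: here the age group rules out one of two same-named profiles, and the surviving match attaches an employer to someone who appeared only as a face and a name in a meeting.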

To mitigate these risks, the researchers recommend that video conference participants choose generic pseudonyms and backgrounds. They also suggest that organizations inform employees of the privacy risks of video conferencing and that operators like Zoom add “privacy” modes that foil facial recognition, such as Gaussian noise filters.
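A Gaussian noise filter of the kind the researchers mention can be sketched in a few lines of NumPy. This assumes 8-bit RGB frames and a hypothetical default noise level; the study does not say how much noise, if any, would defeat a particular face recognizer.

```python
import numpy as np


def add_gaussian_noise(frame: np.ndarray, sigma: float = 10.0, seed=None) -> np.ndarray:
    """Add zero-mean Gaussian noise to an 8-bit image and clip back to [0, 255].

    sigma is a hypothetical default, not a value taken from the study.
    """
    rng = np.random.default_rng(seed)
    noisy = frame.astype(np.float64) + rng.normal(0.0, sigma, size=frame.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


# Usage: perturb a flat gray 4x4 RGB frame with a fixed seed for repeatability.
frame = np.full((4, 4, 3), 128, dtype=np.uint8)
noisy = add_gaussian_noise(frame, sigma=5.0, seed=0)
```

The trade-off is between privacy and picture quality: noise strong enough to disrupt recognition models also degrades what other participants see, so any product setting would need tuning against real recognizers.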

“In the current global reality of social distancing, we must be sensitive to online privacy issues that accompany changes in our lifestyle as society is pushed towards a more virtual world,” the coauthors added.

It’s not the first time video conferencing platforms have been the subject of privacy concerns. In early April, spurred by high-profile incidents, Zoom outlined a 90-day plan during which it would freeze new features to focus on security. And Microsoft fixed a bug that made it possible for attackers to steal Teams account data.