Hailo, a startup developing hardware designed to speed up AI inferencing at the edge, today announced that it’s raised $60 million in series B funding led by previous and new strategic investors. CEO Orr Danon says the tranche will be used to accelerate the rollout of Hailo’s Hailo-8 chip, which was officially detailed in May 2019 ahead of an early 2020 ship date — a chip that enables devices to run algorithms that previously would have required a datacenter’s worth of compute. Hailo-8 could give edge devices far more processing power than before, enabling them to perform AI tasks without the need for a cloud connection.

“The new funding will help us [deploy to] … areas such as mobility, smart cities, industrial automation, smart retail and beyond,” said Danon in a statement, adding that Hailo is in the process of attaining certification for ASIL-B at the chip level (and ASIL-D at the system level) and that the chip is AEC-Q100 qualified.

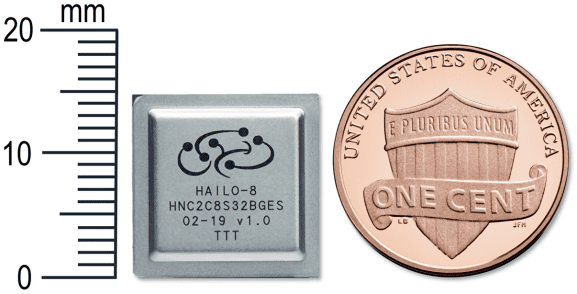

Hailo-8, which Hailo says it has been sampling with “select partners” for over a year, features an architecture (“Structure-Defined Dataflow”) that ostensibly consumes less power than rival chips while incorporating memory, software control, and a heat-dissipating design that eliminates the need for active cooling. Under the hood of the Hailo-8, resources including memory, control, and compute blocks are distributed throughout the chip, and Hailo’s software, which supports Google’s TensorFlow machine learning framework and ONNX (an open format built to represent machine learning models), analyzes the requirements of each AI algorithm and allocates the appropriate modules.

Hailo-8 is capable of 26 tera-operations per second (TOPs), with a rated efficiency of 2.8 TOPs per watt. Here’s how that compares with the competition:

- Nvidia Jetson Xavier NX: 21 TOPs (1.4 TOPs per watt)

- Google’s Edge TPU: 4 TOPs (2 TOPs per watt)

- AIStorm: 2.5 TOPs (10 TOPs per watt)

- Kneron KL520: 0.3 TOPs (1.5 TOPs per watt)
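Since efficiency is throughput divided by power, the figures above also imply a rough power draw for each chip. The minimal Python sketch below works that out using only the vendor-reported numbers quoted here; the `CHIPS` table and `implied_watts` helper are illustrative names, not part of any vendor tool.

```python
# Vendor-reported peak throughput (TOPs) and efficiency (TOPs per watt),
# as quoted in the comparison above.
CHIPS = {
    "Hailo-8": (26.0, 2.8),
    "Nvidia Jetson Xavier NX": (21.0, 1.4),
    "Google Edge TPU": (4.0, 2.0),
    "AIStorm": (2.5, 10.0),
    "Kneron KL520": (0.3, 1.5),
}


def implied_watts(tops: float, tops_per_watt: float) -> float:
    """Power draw implied by dividing throughput by efficiency."""
    return tops / tops_per_watt


if __name__ == "__main__":
    # Rank chips from most to least efficient and show the implied power.
    ranked = sorted(CHIPS.items(), key=lambda kv: kv[1][1], reverse=True)
    for name, (tops, eff) in ranked:
        print(f"{name:<25} {tops:>5.1f} TOPs  {eff:>4.1f} TOPs/W  "
              f"~{implied_watts(tops, eff):.2f} W")
```

Vendors may quote peak throughput and efficiency under different conditions, so the implied wattages are only rough estimates. By these numbers, AIStorm leads on efficiency while Hailo-8 leads on raw throughput.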

In a recent benchmark test conducted by Hailo, the Hailo-8 outperformed hardware like Nvidia’s Xavier AGX on several AI benchmarks spanning semantic segmentation, object detection, and image classification with ResNet-50. At an image resolution of 224 x 224, it processed 672 frames per second on ResNet-50, compared with the Xavier AGX’s 656, and sucked down only 1.67 watts (equating to 2.8 TOPs per watt) versus the Nvidia chip’s 32 watts (0.14 TOPs per watt).
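Normalizing Hailo’s reported frame rates by power draw shows where the gap comes from. This minimal Python sketch assumes only the figures Hailo reported for this test; `fps_per_watt` is an illustrative helper, not a standard benchmark metric from either vendor.

```python
# Figures reported by Hailo for ResNet-50 at 224 x 224 resolution.
HAILO_FPS, HAILO_WATTS = 672, 1.67
XAVIER_FPS, XAVIER_WATTS = 656, 32.0


def fps_per_watt(fps: float, watts: float) -> float:
    """Frames processed per second for each watt consumed."""
    return fps / watts


if __name__ == "__main__":
    hailo = fps_per_watt(HAILO_FPS, HAILO_WATTS)
    xavier = fps_per_watt(XAVIER_FPS, XAVIER_WATTS)
    print(f"Hailo-8:    {hailo:.1f} fps/W")   # about 402.4
    print(f"Xavier AGX: {xavier:.1f} fps/W")  # 20.5
    print(f"Ratio:      {hailo / xavier:.1f}x")  # about 19.6
```

The roughly 20x advantage in frames per watt lines up with the quoted 2.8 versus 0.14 TOPs per watt, since the two chips’ raw frame rates are nearly equal.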

Hailo says it’s working to build the Hailo-8 into products from OEMs and tier-1 automotive companies in areas such as advanced driver-assistance systems (ADAS), robotics, smart cities, and smart homes. In the future, Danon expects the chip will make its way into fully autonomous vehicles, smart cameras, smartphones, drones, AR/VR platforms, and perhaps even wearables.

In addition to existing investors, NEC Corporation, Latitude Ventures, and the venture arm of industrial automation and robotics company ABB (ABB Technology Ventures) also participated in the series B. The round brings three-year-old, Tel Aviv-based Hailo’s total venture funding to date to $88 million.

It’s worth noting that Hailo has plenty in the way of competition. Startups AIStorm, Esperanto Technologies, Quadric, Graphcore, Xnor, and Flex Logix are developing chips customized for AI workloads — and they’re far from the only ones. The machine learning chip segment was valued at $6.6 billion in 2018, according to Allied Market Research, and it is projected to reach $91.1 billion by 2025.
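Those two market figures imply a compound annual growth rate (CAGR). The minimal Python sketch below assumes seven compounding years from 2018 to 2025; the research firm’s own stated rate may use a different base year, so treat the result as a back-of-the-envelope check rather than a quoted figure.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Allied Market Research figures quoted above, in billions of US dollars.
    rate = cagr(6.6, 91.1, years=2025 - 2018)
    print(f"Implied CAGR 2018-2025: {rate:.1%}")  # about 45.5%
```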

Mobileye, the Israeli company Intel acquired for $15.3 billion in March 2017, offers a computer vision processing solution for AVs in its EyeQ product line. Baidu in July unveiled Kunlun, a chip for edge computing on devices and in the cloud via datacenters. Chinese retail giant Alibaba said it launched an AI inference chip for autonomous driving, smart cities, and logistics verticals in the second half of 2019. And looming on the horizon is Intel’s Nervana, a chip optimized for image recognition that can distribute neural network parameters across multiple chips, achieving very high parallelism.