If you read enough science news, you’ll know that there’s a long list of experiments attempting to “prove Einstein wrong.” None have yet contradicted his hallmark theory of relativity. But the latest effort to falsify his statements surrounding “spooky action at a distance” has gone truly cosmic.

Scientists have long performed tests demonstrating that the quantum concept of “entanglement” forces us to accept something that doesn’t make much logical sense. But in order to get around loopholes in previous iterations of the test, which were conducted entirely here on Earth, scientists have recently hooked their experiments up to telescopes observing the cosmos.

“We’ve outsourced randomness to the furthest quarters of the universe, tens of billions of light years away,” David Kaiser, one of the study’s authors from MIT, told Gizmodo.

Let’s start at the beginning: Quantum mechanics describes the universe’s smallest particles as having a restricted set of innate properties, which are mostly a mystery to us humans until we measure them. The math of quantum mechanics introduces the idea that two particles can become “entangled,” so their joint properties must be described with the same mathematical machinery. But here’s the problem: If you separate these particles to opposite ends of the universe and measure them, they’ll maintain this eerie connection; you can still infer the properties of one particle by measuring the other.

Einstein, along with Boris Podolsky and Nathan Rosen, thought that one of two things could cause this “spooky action at a distance,” as Einstein described it. Either the particles somehow communicate faster than the speed of light, which Einstein’s theories demonstrated is impossible, or there was hidden information humans weren’t accessing that ensured particles took on these correlated values in the first place.

But John Stewart Bell showed mathematically that local hidden information could never fully reproduce the correlations that quantum mechanics predicts for the particles. Scientists have devised increasingly complex ways to test this idea since the 1960s.

These tests usually look rather similar. Scientists generate pairs of entangled photons, each with one of two polarization states—imagine that, viewed from a certain angle, both photons are either small vertical lines or horizontal lines. The photons, when entangled, will have the same polarization state—though which one, horizontal or vertical, is a mystery until the measurement. The scientists send the photons to two distant detectors that measure the photons from two angles: the angle from which the polarization and entanglement are visible, or a different angle (if the photons are viewed from this different angle, they become unentangled). Each detector lies in wait for the particles—which, if everything lines up, will produce a simultaneous blip. These simultaneous blips should occur more frequently for sets of entangled particles than sets of unentangled ones.

Some percentage of simultaneous blips above a certain threshold would prove Einstein, Podolsky, and Rosen wrong: it would demonstrate that no local hidden variables in the laws of physics predetermine the particles’ identities.
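To make that threshold concrete, here is a minimal sketch using one common form of Bell's inequality, the CHSH inequality. The article does not say which form these experiments used, so treat that choice as an assumption, along with the function names and the polarizer angles (0, 45, 22.5, and 67.5 degrees), which are standard textbook values rather than anything taken from the paper. The sketch compares the largest score any predetermined "hidden instruction" scheme can reach with the score quantum mechanics predicts for photons that share the same polarization.

```python
import itertools
import math


def quantum_correlation(a_deg, b_deg):
    """Quantum prediction for the correlation between two photons entangled to
    share the same polarization, measured at polarizer angles a_deg and b_deg.
    (Textbook formula; assumed here, not quoted from the article.)"""
    return math.cos(2 * math.radians(a_deg - b_deg))


def chsh_value(correlation, a, a_alt, b, b_alt):
    """Combine four correlations into the CHSH score S."""
    return (correlation(a, b) - correlation(a, b_alt)
            + correlation(a_alt, b) + correlation(a_alt, b_alt))


def max_local_hidden_variable_chsh():
    """Brute-force every way hidden variables could predetermine the +1/-1
    outcome for each of the four settings, and return the largest |S|.
    Random mixtures of these assignments cannot exceed the same maximum."""
    best = 0
    for a, a_alt, b, b_alt in itertools.product((-1, 1), repeat=4):
        s = a * b - a * b_alt + a_alt * b + a_alt * b_alt
        best = max(best, abs(s))
    return best


# Illustrative angles in degrees (an assumption, not the experiment's values).
quantum_s = chsh_value(quantum_correlation, 0.0, 45.0, 22.5, 67.5)
classical_bound = max_local_hidden_variable_chsh()

print(f"Hidden-variable limit: {classical_bound}")
print(f"Quantum prediction:    {quantum_s:.3f}")
```

Under these assumptions the hidden-variable limit comes out to 2, while the quantum prediction is about 2.83 (that is, 2 times the square root of 2). A measured score reliably above 2 is the kind of result that rules out local hidden variables.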

But there’s a loophole—perhaps the apparatus influences the measurement, somehow, and forces the photons to carry the same polarization? In order to prevent this, scientists randomly switch the detector between the two measurement angles. Then comes the next loophole: What if the random-number generator determining the measurement angle isn’t really random; what if what we see as randomness has actually been predetermined by the laws of physics that brought humans to this point?

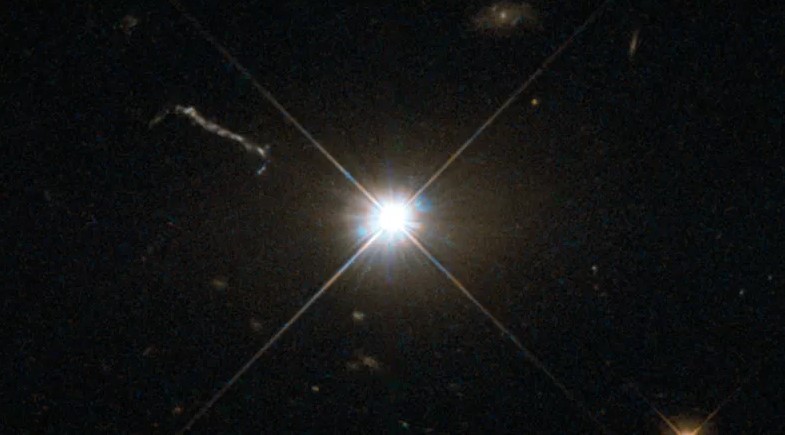

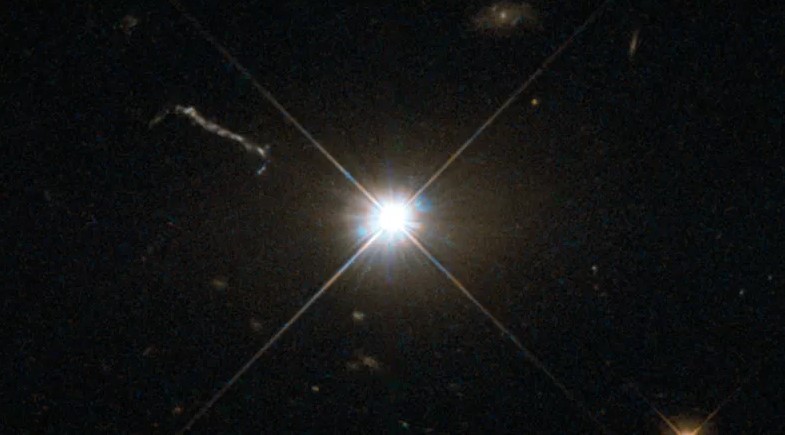

Two teams of scientists got around this problem by hooking their random-number generator up to a pair of telescopes. In the more dramatic case, the team including Kaiser worked from two telescopes on La Palma in the Canary Islands: the Telescopio Nazionale Galileo, pointing at bright light sources called quasars on one side of the sky that emitted their light 7.78 billion and 3.22 billion years ago, and the William Herschel Telescope, pointing to a light source that emitted light 12.21 billion years ago. If each telescope observed light that was slightly bluer than a reference color, its corresponding detector would measure the light’s polarization in one setting. If the light was slightly redder, then the detector would use the other setting.
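The color rule described above can be sketched in a few lines. This is only an illustration: the function name, the setting labels, the reference wavelength, and the sample photon wavelengths are all hypothetical, and the article does not describe how the real instruments sort colors.

```python
def choose_setting(observed_wavelength_nm, reference_wavelength_nm):
    """Pick a detector setting from the color of one incoming quasar photon.
    Bluer light has a shorter wavelength than the reference; redder light
    has a longer one."""
    if observed_wavelength_nm < reference_wavelength_nm:
        return "setting_1"  # bluer than the reference color
    return "setting_2"      # redder than (or equal to) the reference color


# Hypothetical numbers, chosen only to show the rule in action.
REFERENCE_NM = 700.0
photon_wavelengths_nm = [650.2, 720.9, 699.9, 701.3]

settings = [choose_setting(w, REFERENCE_NM) for w in photon_wavelengths_nm]
print(settings)
```

The point of the design is that nothing on Earth picks the setting: each choice is fixed by a photon that left its quasar billions of years ago.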

In a test of 30,000 pairs of particles, their polarizations correlated too closely to be explained by one of these local hidden variable theories, according to the paper published in Physical Review Letters. That means that any hidden influence on both particles would have needed to act billions of years ago to somehow affect the way scientists measured these particles here on Earth. The more likely explanation is that quantum mechanics remains spooky at a distance and can’t be explained by hidden variables. It appears that Einstein was wrong about this one.

The researchers took care to account for astronomical effects that might have biased their measurements. For example, they chose a color of light to measure that wouldn’t be absorbed by interstellar gas, and they ensured that they took gravity and the universe’s expansion into account, explained Kaiser. The second, similar experiment, also published in Physical Review Letters, observed the same higher-than-classical correlations, bolstering both papers’ evidence.

Quantum mechanics’ weirdness continues to boggle minds. This weirdness is at the heart of the emerging field of quantum computers, which rely on entanglement in order to perform their calculations. Said Kaiser: “These devices are built on the assumption that entanglement is real.”

Scientists can perhaps further refine these tests by using light from even deeper into the universe.

It’s the job of physicists to test the laws of physics and ensure that they continue not to break. I hope by now it’s become utterly clear: In quantum physics, spookiness is a given.