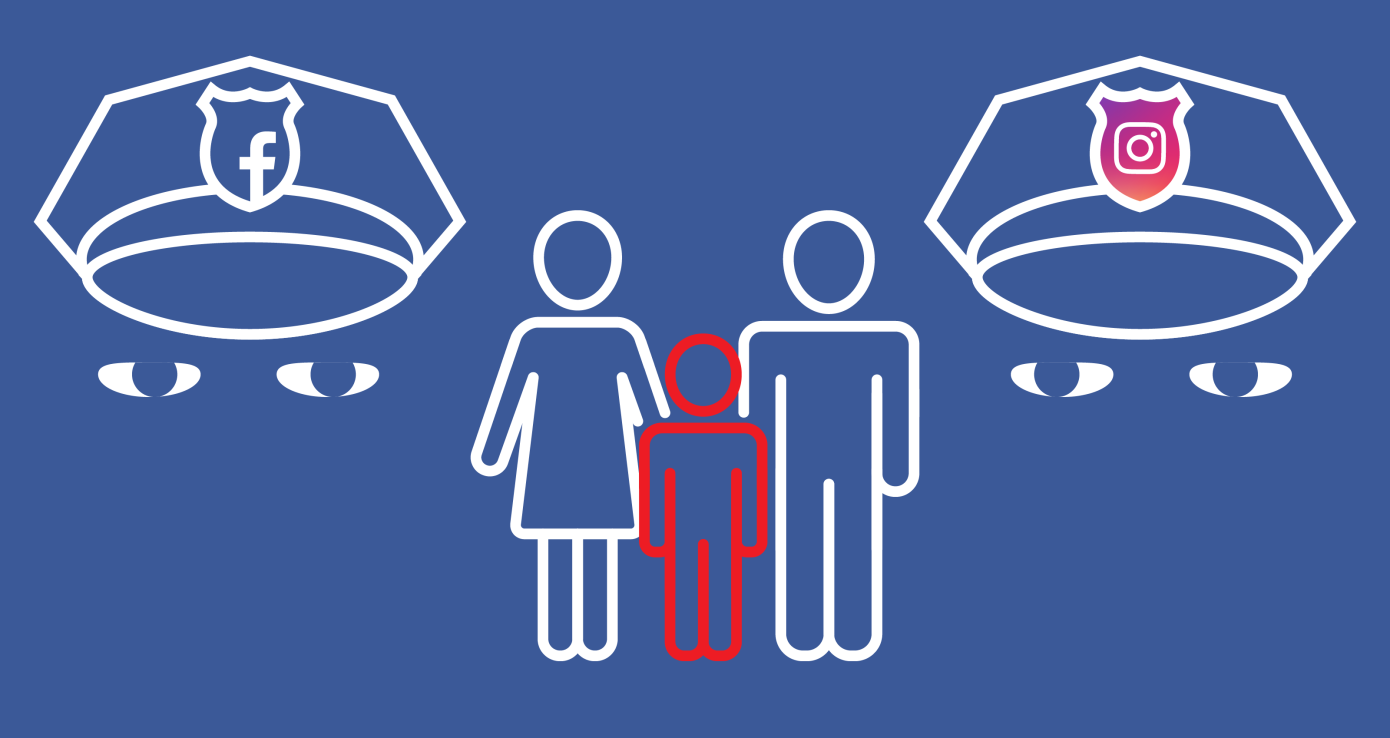

Facebook and Instagram will more proactively lock the accounts of users its moderators encounter and suspect are below the age of 13. Its former policy was to only investigate accounts if they were reported specifically for being potentially underage. But Facebook confirmed to TechCrunch that an “operational” change to its policy for reviewers made this week will see them lock the accounts of any underage user they come across, even if they were reported for something else, such as objectionable content, or are otherwise discovered by reviewers. Facebook will require the users to provide proof that they’re over 13, such as a government-issued photo ID, to regain access. The problem stems from Facebook not requiring any proof of age upon signup.

A tougher stance here could reduce Facebook and Instagram’s user counts and advertising revenue. The apps’ formerly more hands-off approach allowed them to hook young users so by the time they turned 13, they had already invested in building a social graph and history of content that tethers them to the Facebook corporation. While Facebook has lost cachet with the youth over time and as their parents joined, Instagram is still wildly popular with them and likely counts many tweens or even younger children as users.

The change comes in response to an undercover documentary report by the U.K.’s Channel 4 and Firecrest Films that saw a journalist become a Facebook content reviewer through a third-party firm called CPL Resources in Dublin, Ireland. A reviewer there claims they were instructed to ignore users who appeared under 13, saying “We have to have an admission that the person is underage. If not, we just like pretend that we are blind and that we don’t know what underage looks like.” The report also outlined how far-right political groups are subject to different thresholds for deletion than other Pages or accounts if they post hateful content in violation of Facebook’s policies.

In response, Facebook published a blog post on July 16 claiming that high-profile Pages and registered political groups may receive a second layer of review from Facebook employees. But in an update on July 17, Facebook noted that “Since the program, we have been working to update the guidance for reviewers to put a hold on any account they encounter if they have a strong indication it is underage, even if the report was for something else.”

Now a Facebook spokesperson confirms to TechCrunch that this is a change to how reviewers are trained to enforce its age policy for both Facebook and Instagram. This does not mean Facebook will begin a broad sweep of its site hunting for underage users, but it will stop ignoring those it comes across. Facebook’s spokesperson stressed that its terms of service that already bar underage users remain the same, but the operational guidance given to moderators for enforcing that policy has changed.

Facebook prohibits users under 13 to comply with the U.S. Children’s Online Privacy Protection Act, which requires parental consent to collect data about children. The change could see more underage users have their accounts terminated. That might in turn reduce the site’s utility for their friends over or under age 13, making them less engaged with the social network.

The news comes in contrast to Facebook purposefully trying to attract underage users through its Messenger Kids app that lets children ages 6 to 12 chat with those approved by their parents, which today expanded beyond the U.S. to Mexico, Canada and Peru. With one hand, Facebook is trying to make under-13 users dependent on the social network… while pushing them away with the other.

Child signups lead to problems as users age

A high-ranking source who worked at Facebook in its early days previously told me that one repercussion of a hands-off approach to policing underage users was that as some got older, Facebook would wrongly believe they were over 18 or over 21.

That’s problematic because it could make minors improperly eligible to see ads for alcohol, real-money gambling, loans or subscription services. They’d also be able to see potentially offensive content such as graphic violence that only appears to users over 18 and is hidden behind a warning interstitial. Facebook might also expose their contact info, school and birthday in public search results, which it hides for users under 18.

Users who request to change their birth date may have their accounts suspended, deterring users from coming clean about their real age. A Facebook spokesperson confirmed that in the U.S., Canada and EU, if a user listed as over 18 tries to change their age to be under 18 or vice versa, they would be prompted to provide proof of age.

Facebook might be wise to offer an amnesty period to users who want to correct their age without having their accounts suspended. Getting friends to confirm friend requests and building up a profile takes time and social capital that formerly underage users who are now actually over 13 might not want to risk just to be able to display their accurate birth date and protect Facebook. If the company wants to correct the problem, it may need to offer a temporary consequence-free method for users to correct their age. It could then promote this option to its youngest users or those whom algorithms suggest might be under 13 based on their connections.

Facebook doesn’t put any real roadblock to sign up in front of underage users beyond a self-certification that they are of age, likely to keep it easy to join the social network and grow its business. It’s understandable that some 9- or 11-year-olds would lie to gain access. Blindly believing self-certifications led to the Cambridge Analytica scandal, as the data research firm promised Facebook it had deleted surreptitiously collected user data, but Facebook failed to verify that.

There are plenty of other apps that flout COPPA by making it easy for underage children to sign up. Lip-syncing app Musical.ly is particularly notorious for featuring girls under 13 dancing provocatively to modern pop songs in front of audiences of millions, which worryingly include adults. The company’s CEO Alex Zhu angrily denied that it violates COPPA when I confronted him with evidence at TechCrunch Disrupt London in 2016.

Facebook’s reckoning

The increased scrutiny brought on by the Cambridge Analytica debacle, Russian election interference, screen-time addiction, lack of protections against fake news and lax policy toward conspiracy theorists and dangerous content has triggered a reckoning for Facebook.

Yesterday Facebook announced a content moderation policy update, telling TechCrunch, “There are certain forms of misinformation that have contributed to physical harm, and we are making a policy change which will enable us to take that type of content down. We will be begin implementing the policy during the coming months.” That comes in response to false rumors spreading through WhatsApp leading to lynch mobs murdering people in countries like India. The policy could impact conspiracy theorists and publications spreading false news on Facebook, some of which claim to be practicing free speech.

Across safety, privacy and truth, Facebook will have to draw the line on how proactively to police its social network. It’s left trying to balance its mission to connect the world, its business that thrives on maximizing user counts and engagement, its brand as a family-friendly utility, its responsibility to protect society and democracy from misinformation and its values that endorse free speech and a voice for everyone. Something’s got to give.